Background

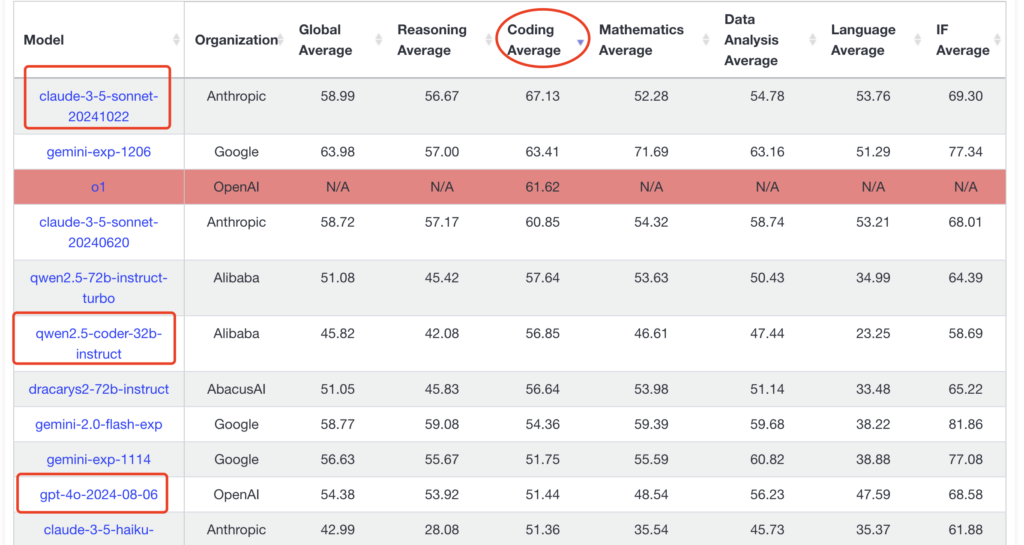

The large-model field is currently crowded with options, such as OpenAI’s GPT series, Alibaba’s Tongyi, and Anthropic’s Claude series. I already have the official OpenAI API for general use and language tasks. For coding, however, GPT-4o is usable but I still badly want a Claude Sonnet model, as evaluations of various AI models show:

As for using Claude directly, being in China brings problems such as account restrictions and difficulty paying by credit card, so finding a relay service is the practical solution.

Introduction to Relay Service

Through online research, I found the Dream API (https://oneapi.paintbot.top/) as a relay site, which has two significant advantages:

- Comprehensive models, including OpenAI, Gemini, and Claude

- Affordability. According to the site’s own statement, the current top-up rate is ¥1 for $1 of credit, which makes every model roughly seven times cheaper than the official pricing.

Here’s a screenshot of the supported model list:

Pain Points of the Relay Service

Finding a relay service is not difficult, but the downside is that most relay services are based on the open-source New-API. This means that all channels (OpenAI/Claude/Gemini) end up returning data in the OpenAI-compatible format. This issue has been discussed in the original program’s documentation and issue threads. You can find the issue here: https://github.com/Calcium-Ion/new-api/issues/578.
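To make the difference between the two formats concrete, here is a minimal Python sketch that pulls the reply text out of a non-streaming response body in either shape. The field names follow the public OpenAI Chat Completions and Anthropic Messages response formats; the function name and the sample bodies are made up for illustration and are not taken from the relay.

```python
def extract_reply(body):
    """Return (format_name, text) for a non-streaming chat response body."""
    if "choices" in body:
        # OpenAI-compatible shape: text lives under choices[0].message.content
        return "openai", body["choices"][0]["message"]["content"]
    if body.get("type") == "message":
        # Claude shape: text lives in a list of typed content blocks
        text = "".join(
            block["text"] for block in body["content"] if block.get("type") == "text"
        )
        return "claude", text
    raise ValueError("unrecognized response format")


# Hypothetical sample bodies, trimmed to the fields used above.
openai_body = {
    "object": "chat.completion",
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "Hi there"},
            "finish_reason": "stop",
        }
    ],
}
claude_body = {
    "type": "message",
    "role": "assistant",
    "content": [{"type": "text", "text": "Hi there"}],
    "stop_reason": "end_turn",
}

print(extract_reply(openai_body))
print(extract_reply(claude_body))
```

A client that only understands the second shape cannot read the first, which is why a relay that always answers in the OpenAI-compatible shape cannot be plugged into a Claude channel as-is.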

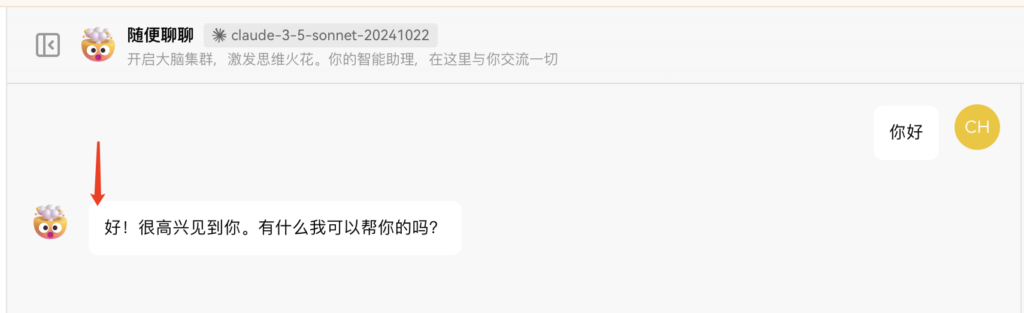

While this format conversion can be useful in some cases, it presents a significant problem for me: I cannot separate my OpenAI and Claude channels; they must be used as one. For instance, using the Claude model in the relay’s LobeChat client leads to the following result:

This is unacceptable for me, as I’ve mentioned that I have access to an OpenAI channel, and I simply want to use the Claude model from the relay service.

Solutions

Solution One (Unsuccessful):

The response in the issue suggested using a free yet closed-source software called new-api-horizon:

I tried this solution, but the software seems to be designed for Claude-format upstreams. When it receives OpenAI-compatible content it does respond, but it often drops the first 2-3 characters of each reply, which makes it unusable. An example of the output is below:

Solution Two:

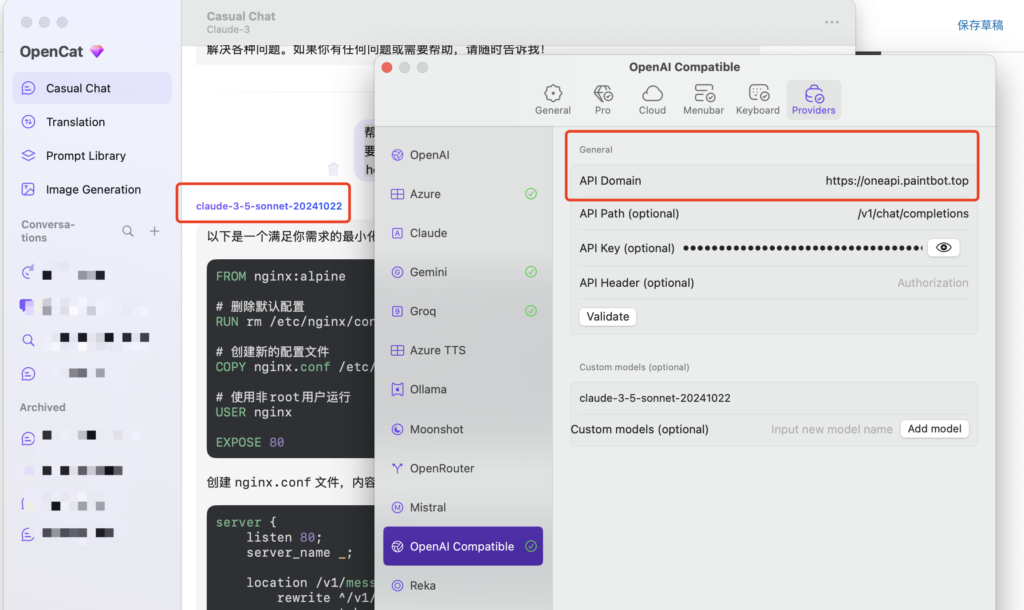

Some client software lets me configure an OpenAI channel and an OpenAI-compatible channel side by side, which meets my needs. OpenCat, for example, is enough:

Solution Three:

In fact, Solution Two pretty much meets my needs, but a few pain points remain:

- OpenCat is exclusive to macOS/iOS and cannot be used on Windows

- It can’t be shared with third parties (not that I’m likely to need that)

- My go-to software is LobeChat; I rarely use OpenCat now

- Perfectionism…

After searching the internet, I found that OpenAI’s popularity and market share have produced many format converters, but almost all of them convert other formats into the OpenAI-compatible one. I couldn’t find any that convert the OpenAI format into Claude’s. Left with no choice, I decided to write my own converter:

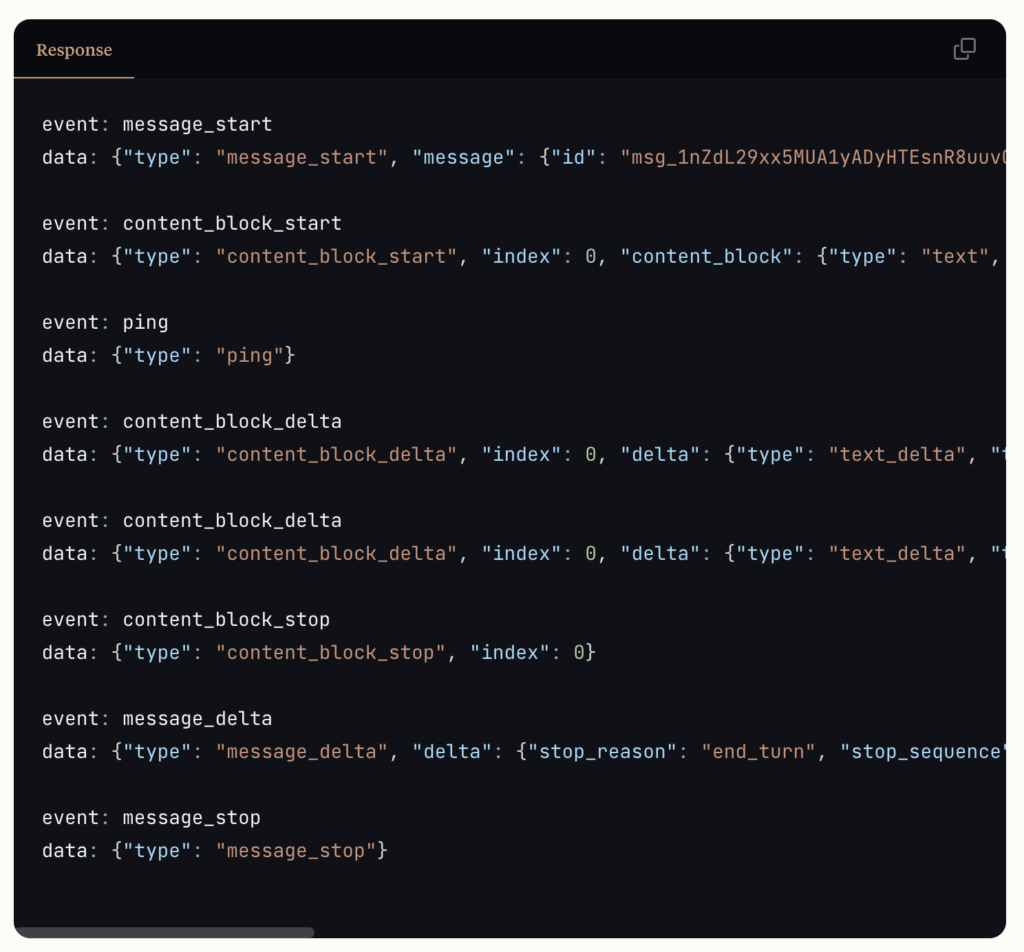

First, I referred to the Claude AI API documentation to see the streaming output format: https://docs.anthropic.com/en/api/messages-streaming

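A basic example starts with a message_start event, followed by content_block_start and ping events, then streams the text as a series of content_block_delta events, and finally sends a message_stop event with no payload beyond its type to conclude. (In the documented flow, each content block is also closed by content_block_stop, and a message_delta event precedes message_stop.)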
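Seen from the client side, that event flow can be read with a few lines of Python. This is only a sketch: the function name and the sample stream are invented for illustration, the payloads are trimmed to the fields that matter here, and it assumes the whole stream is already available as one string with every field written as `name: value`.

```python
import json


def read_claude_text(sse_text):
    """Collect the reply text from a Claude-style SSE stream given as one string."""
    parts = []
    for frame in sse_text.strip().split("\n\n"):
        # Each frame is an "event: ..." line followed by a "data: ..." line.
        fields = dict(
            line.split(": ", 1) for line in frame.splitlines() if ": " in line
        )
        event = fields.get("event")
        if event == "content_block_delta":
            delta = json.loads(fields["data"])["delta"]
            if delta.get("type") == "text_delta":
                parts.append(delta["text"])
        elif event == "message_stop":
            break
    return "".join(parts)


# Hypothetical stream with trimmed payloads.
stream = (
    'event: message_start\n'
    'data: {"type": "message_start", "message": {"id": "msg_example", '
    '"type": "message", "role": "assistant", "content": []}}\n\n'
    'event: content_block_start\n'
    'data: {"type": "content_block_start", "index": 0, '
    '"content_block": {"type": "text", "text": ""}}\n\n'
    'event: ping\n'
    'data: {"type": "ping"}\n\n'
    'event: content_block_delta\n'
    'data: {"type": "content_block_delta", "index": 0, '
    '"delta": {"type": "text_delta", "text": "Hello"}}\n\n'
    'event: content_block_delta\n'
    'data: {"type": "content_block_delta", "index": 0, '
    '"delta": {"type": "text_delta", "text": "!"}}\n\n'
    'event: content_block_stop\n'
    'data: {"type": "content_block_stop", "index": 0}\n\n'
    'event: message_stop\n'
    'data: {"type": "message_stop"}\n\n'
)

print(read_claude_text(stream))
```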

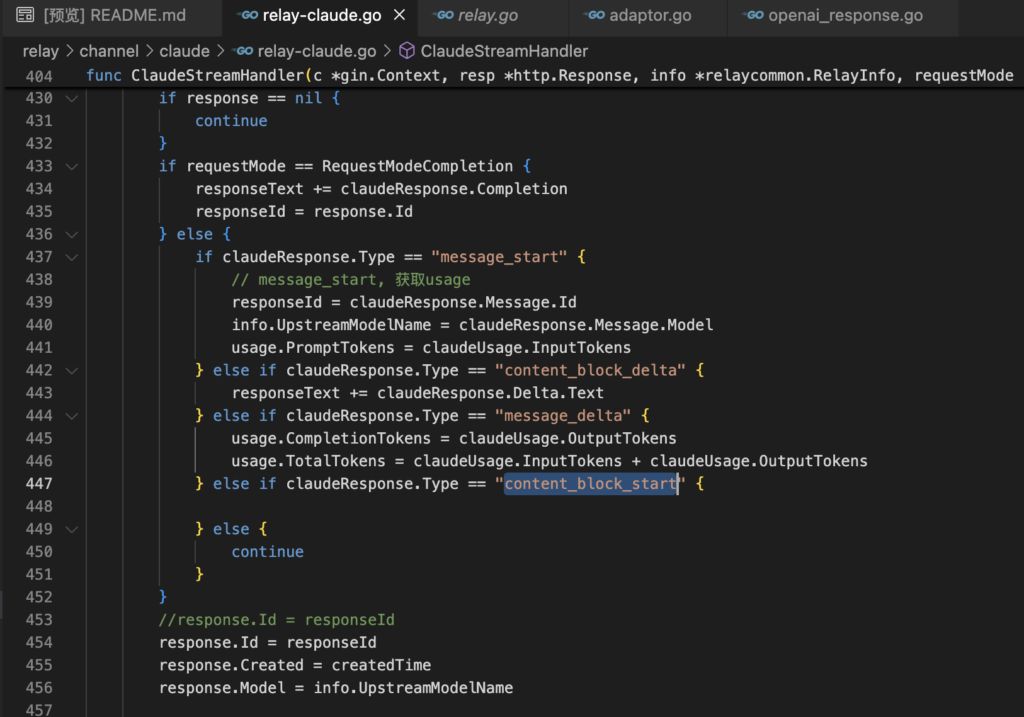

Then, I examined the source code of the New-API software (used by the relay service) to understand how it converts Claude’s responses to OpenAI’s compatible mode:

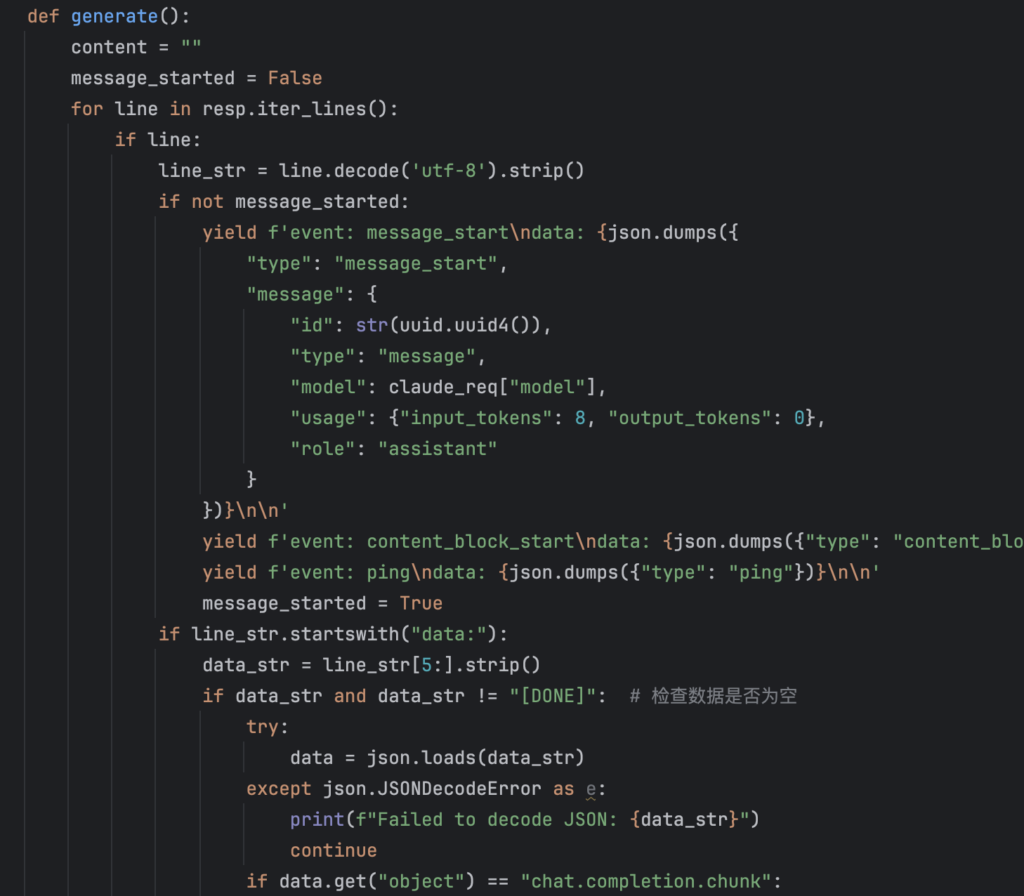

Next, I wrote my converter by following the format and conversion logic used by the relay service, in reverse. The basic logic is that when the relay service sends its first response, I emit the message_start, content_block_start, and ping events to the client, then forward each piece of received content to the frontend in order as content_block_delta events, and finally output message_stop:
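A minimal Python sketch of that logic follows. It is an illustration under assumptions rather than the actual converter: it assumes the relay sends standard `data: {...}` lines with text under `choices[0].delta.content` and ends with `data: [DONE]`, and the function names, the message id, the model name, and the zero token counts are placeholders. It also emits content_block_stop and message_delta before message_stop, because Anthropic’s documented flow includes them even though the description above only mentions message_stop.

```python
import json


def sse(name, data):
    """Format one Claude-style SSE frame."""
    return f"event: {name}\ndata: {json.dumps(data)}\n\n"


def openai_to_claude(openai_lines, model="claude-placeholder"):
    """Translate OpenAI-compatible SSE lines into Claude-style SSE frames."""
    started = False
    for line in openai_lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # ignore blank separator lines
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        if not started:
            # First upstream chunk: open the Claude message before any text.
            started = True
            yield sse("message_start", {"type": "message_start", "message": {
                "id": "msg_placeholder", "type": "message", "role": "assistant",
                "content": [], "model": model,
                "stop_reason": None, "stop_sequence": None,
                "usage": {"input_tokens": 0, "output_tokens": 0}}})  # placeholder usage
            yield sse("content_block_start", {
                "type": "content_block_start", "index": 0,
                "content_block": {"type": "text", "text": ""}})
            yield sse("ping", {"type": "ping"})
        choices = json.loads(payload).get("choices") or []
        text = (choices[0].get("delta") or {}).get("content") if choices else None
        if text:
            yield sse("content_block_delta", {
                "type": "content_block_delta", "index": 0,
                "delta": {"type": "text_delta", "text": text}})
    if started:
        # Close the block and the message once the upstream stream ends.
        yield sse("content_block_stop", {"type": "content_block_stop", "index": 0})
        yield sse("message_delta", {
            "type": "message_delta",
            "delta": {"stop_reason": "end_turn", "stop_sequence": None},
            "usage": {"output_tokens": 0}})  # placeholder usage
        yield sse("message_stop", {"type": "message_stop"})


# Hypothetical upstream lines from an OpenAI-compatible relay.
upstream = [
    'data: {"choices":[{"index":0,"delta":{"role":"assistant","content":""}}]}',
    '',
    'data: {"choices":[{"index":0,"delta":{"content":"Hel"}}]}',
    'data: {"choices":[{"index":0,"delta":{"content":"lo"}}]}',
    'data: [DONE]',
]

for frame in openai_to_claude(upstream):
    print(frame, end="")
```

Writing the converter as a generator means each upstream chunk can be forwarded as soon as it arrives, so the client still sees the reply stream in real time.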

You can find the complete source code on my GitHub:

Conclusion

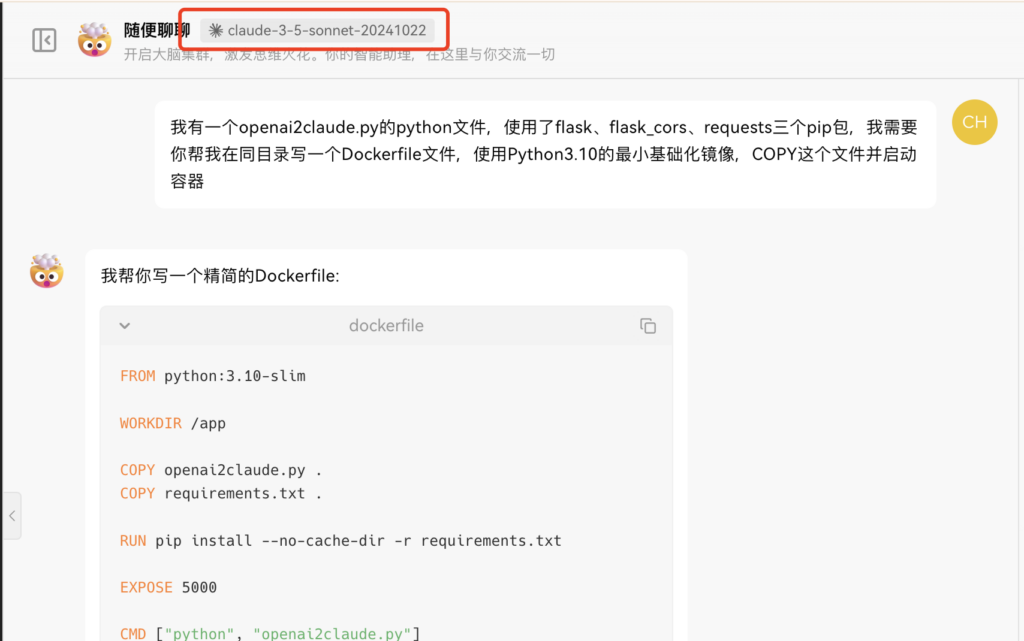

The final result is as follows:

Promotion

If you’re interested in this relay service, you can register using my referral code: https://oneapi.paintbot.top/register?aff=J57n

Note: This is not an official API. The group owner has said they would issue refunds even if the service shuts down, but please use your own judgment.
